Analyzing raw log files for SEO

What is a web server’s raw log?

Have you heard of a web server’s access log or error log, collectively known as “raw logs”? Have you ever seen one? Here are a few examples.

Access log

www.principle-c.com xx.xx.xx.xx - - [28/Aug/2018:00:51:26 +0900] "GET /column/marketing/google-optimize-additionalfunctions/ HTTP/1.1" 200 31248 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

www.principle-c.com xx.xx.xx.xx - - [28/Aug/2018:00:59:26 +0900] "GET /column/global-marketing/youtube_big_brand/ HTTP/1.1" 200 21127 "https://www.google.co.jp/" "Mozilla/5.0 (iPhone; CPU iPhone OS 11_0_2 like Mac OS X) AppleWebKit/604.1.38 (KHTML, like Gecko) Version/11.0 Mobile/15A421 Safari/604.1"

Error log

[Thu Mar 01 10:38:15.774578 2018] [access_limit:warn] [pid 88859] [client xx.xx.xx.xx:0] AccessExceededNumber

[Thu Mar 01 10:38:15.776677 2018] [access_limit:warn] [pid 88628] [client xx.xx.xx.xx:0] AccessExceededNumber

[Thu Mar 01 10:38:15.776731 2018] [access_limit:warn] [pid 88628] [client xx.xx.xx.xx:0] AccessExceededNumber

[Thu Mar 01 10:38:15.777388 2018] [access_limit:warn] [pid 88869] [client xx.xx.xx.xx:0] AccessExceededNumber

* The format of a raw log varies with the type of web server (Apache, Microsoft Internet Information Services (IIS), Nginx, etc.), and it can also be customized. Your raw log may therefore not look exactly like the ones shown here, but these examples give you a basic idea of what a raw log looks like.

* The “xx.xx.xx.xx” portions of the raw logs are actually IP addresses. For security and privacy reasons, I have replaced the numbers with x’s.

When a web server receives a request, it records the details of the request and its result in a log file, as shown above. Taking the access log as an example, each entry contains the information listed below.

- Requested target domain (www.principle-c.com for example): When virtual hosts are set up, one web server can operate multiple sites, so it is common for the log to record the domain.

- IP address of the request source (xx.xx.xx.xx)

- Date and time of the request (28/Aug/2018:00:51:26 +0900 for example)

- Content of the request (GET /column/marketing/google-optimize-additionalfunctions/ for example): In this case, the page /column/marketing/google-optimize-additionalfunctions/ received a GET request (a request to fetch the page).

- Response code (“200” for example): This is the status code that the server returned in response to the request.

- Referrer (https://www.google.co.jp/ for example): This is the URL of the page viewed before visiting the site.

- User agent (Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html) for example): This identifies the program, such as a browser or a crawler, that was used to access the website. It may also contain hardware information. Check the user agent when determining whether a request came from a crawler such as Googlebot.
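The fields listed above can be pulled out of an access log line with a regular expression. The following is a minimal sketch, assuming the field order of the sample entries in this post and the plain ASCII quotes and hyphens a server normally writes. The name `parse_access_line` is illustrative, and the number after the response code is assumed to be the response size in bytes. A server with a customized log format will need a different pattern.

```python
import re
from datetime import datetime

# Assumed layout, inferred from the sample entries: domain, client IP,
# two unused fields, [timestamp], "request", status, size, "referrer", "user agent".
LOG_PATTERN = re.compile(
    r'(?P<host>\S+) (?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"'
)


def parse_access_line(line):
    """Return the fields of one access log line as a dict, or None if it does not match."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    entry = match.groupdict()
    entry["status"] = int(entry["status"])
    # Example timestamp: 28/Aug/2018:00:51:26 +0900
    entry["time"] = datetime.strptime(entry["time"], "%d/%b/%Y:%H:%M:%S %z")
    return entry


sample = (
    'www.principle-c.com xx.xx.xx.xx - - [28/Aug/2018:00:51:26 +0900] '
    '"GET /column/marketing/google-optimize-additionalfunctions/ HTTP/1.1" '
    '200 31248 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"'
)
entry = parse_access_line(sample)
print(entry["path"], entry["status"], "Googlebot" in entry["user_agent"])
```

Keep in mind that a user agent string can be set to anything by the client, so a match on “Googlebot” is only a first filter.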

How is raw log analysis useful?

Get a deeper understanding of crawler behavior and API access

Raw log analysis gives you a more detailed picture of how crawlers behave on your site. This covers search engine crawlers such as those of Google or Bing, crawlers from service sites such as Ahrefs (https://ahrefs.com/) and WebMeUp (https://webmeup.com/), and crawlers run by academic and research institutions. In addition, if your company provides an API externally, you can also use raw logs to understand how that API is being accessed.

You can see how often Google crawls your site by looking at the “Crawl Stats” report in Search Console, but that screen does not show which pages the crawler visited. The recently added “URL Inspection Tool” in Google Search Console lets you see crawl dates for specific URLs. Even so, the URL Inspection Tool alone is not enough to find out which pages, directories, or templates are crawled frequently.

URL Inspection Tool

For webmasters, it is essential to understand how frequently the site is crawled. If the crawl frequency can be aggregated by page, directory, and template, it becomes very useful information for planning measures to improve access to the site. If there are pages that you want crawled but that have a low crawl frequency, action must be taken. Conversely, if there are pages that you don’t want crawled but that have a high crawl frequency, a different action is needed. By aggregating raw log data in a tool such as Excel or Tableau, you can see the crawl frequency for pages, directories, and templates and plan the necessary measures.
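The same kind of aggregation can be done with a short script before the data ever reaches Excel or Tableau. The sketch below counts requests whose user agent contains “Googlebot” per top-level directory. The sample lines, the `www.example.com` domain, the documentation IP address, and the helper names are all made up for illustration, and the pattern assumes the access log layout shown earlier in this post.

```python
import re
from collections import Counter

# Captures the requested path and the user agent (the last quoted field).
REQUEST_PATTERN = re.compile(
    r'"\S+ (?P<path>\S+) [^"]*" \d{3} \S+ "[^"]*" "(?P<ua>[^"]*)"'
)


def top_directory(path):
    """Map '/column/marketing/x/' to '/column/'; root-level pages map to '/'."""
    clean = path.split("?", 1)[0]
    segments = [s for s in clean.split("/") if s]
    # A single segment without a trailing slash is treated as a file at the root.
    if not segments or (len(segments) == 1 and not clean.endswith("/")):
        return "/"
    return "/" + segments[0] + "/"


def crawl_counts_by_directory(lines, bot_token="Googlebot"):
    """Count requests per top-level directory for one crawler."""
    counts = Counter()
    for line in lines:
        match = REQUEST_PATTERN.search(line)
        if match and bot_token in match.group("ua"):
            counts[top_directory(match.group("path"))] += 1
    return counts


# Made-up sample data in the same layout as the access log above.
LINE = ('www.example.com 192.0.2.1 - - [28/Aug/2018:00:51:26 +0900] '
        '"GET {path} HTTP/1.1" 200 1234 "-" "{ua}"')
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
IPHONE_UA = "Mozilla/5.0 (iPhone; CPU iPhone OS 11_0_2 like Mac OS X)"

sample_lines = [
    LINE.format(path="/column/marketing/a/", ua=GOOGLEBOT_UA),
    LINE.format(path="/column/seo/b/", ua=GOOGLEBOT_UA),
    LINE.format(path="/service/", ua=GOOGLEBOT_UA),
    LINE.format(path="/column/seo/b/", ua=IPHONE_UA),  # not a crawler, ignored
]
counts = crawl_counts_by_directory(sample_lines)
for directory, hits in counts.most_common():
    print(directory, hits)
```

Grouping by the first path segment is only one possible choice. A deeper directory level, or the full URL, works the same way with a different key function.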

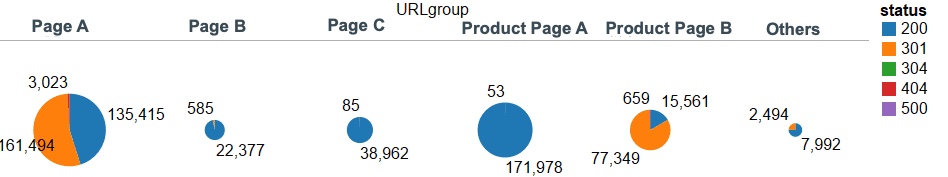

As an example, the following figure, built in Tableau, shows the pages accessed by Google’s crawler (Googlebot) on a specific day, grouped by template and broken down by the response codes the server returned at that time.

The bigger the pie chart, the more the template is crawled. As shown, list page A and detail page A have a large crawl volume, whereas list page B and list page C have a low crawl volume. Depending on whether this is the desired state, the action items will differ, for example reviewing the XML sitemap or reviewing the link structure between pages.

Raw logs also let you check whether redirect settings are correctly implemented when a site is undergoing a redesign. In the previous graph, most of the responses for list page A and detail page B are 301 response codes. If those redirects are intentional, you don’t have to worry. If they aren’t, some kind of adjustment is needed. Without raw logs, you have to fall back on spot-checking a sample of URLs with a crawling tool such as Screaming Frog. With raw logs, you can investigate thoroughly based on actual data.
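A breakdown like the one in the chart, template by response code, can be approximated in a few lines. This is a sketch under stated assumptions: the URL rules in `TEMPLATE_RULES` are hypothetical and must be replaced with whatever defines the templates on your own site, and the sample lines are made up.

```python
import re
from collections import Counter

# Hypothetical URL patterns; replace them with the rules for your own templates.
TEMPLATE_RULES = [
    ("list page", re.compile(r"^/column/[^/]+/$")),
    ("detail page", re.compile(r"^/column/[^/]+/[^/]+/$")),
]

# Captures the requested path and the response code that follows the request.
REQUEST_PATTERN = re.compile(r'"\S+ (?P<path>\S+) [^"]*" (?P<status>\d{3}) ')


def classify(path):
    """Return the name of the first template rule the path matches."""
    for name, pattern in TEMPLATE_RULES:
        if pattern.match(path):
            return name
    return "other"


def template_status_counts(lines, bot_token="Googlebot"):
    """Count (template, response code) pairs for lines that mention the crawler."""
    counts = Counter()
    for line in lines:
        match = REQUEST_PATTERN.search(line)
        # Simple substring check on the whole line, not only the user agent field.
        if match and bot_token in line:
            counts[(classify(match.group("path")), match.group("status"))] += 1
    return counts


# Made-up sample data in the same layout as the access log above.
LINE = ('www.example.com 192.0.2.1 - - [28/Aug/2018:00:51:26 +0900] '
        '"GET {path} HTTP/1.1" {status} 1234 "-" '
        '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"')
sample_lines = [
    LINE.format(path="/column/marketing/", status=200),
    LINE.format(path="/column/marketing/", status=301),
    LINE.format(path="/column/marketing/post-a/", status=200),
    LINE.format(path="/column/marketing/post-b/", status=200),
    LINE.format(path="/", status=200),
]
table = template_status_counts(sample_lines)
for (template, status), hits in sorted(table.items()):
    print(template, status, hits)
```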

Understand details for crawl errors

Raw logs can also help you understand the details of crawl errors. The “Index Coverage Report” in Google Search Console reports various crawl errors. Not every reported error needs to be acted on, but an error that the crawler runs into may eventually prevent users from using the site as well. It is therefore important to resolve errors early when they are large in number or concentrated on templates that are important to the site (such as product detail pages on e-commerce sites).

Index Coverage Report

However, even when you try to fix the errors, the cause can be hard to find. Some errors point to the fix directly. For example, “Submitted URL marked ‘noindex’” tells you either to remove the URLs that carry a noindex tag from the sitemap or, if the noindex tag was inserted by mistake, to remove the tag. On the other hand, errors such as “Server error (5xx)” and “Submitted URL has crawl issue” may have nothing to do with the HTML source and may instead stem from a problem on the server side. In these cases, raw logs can be a very useful source of information.

A raw log contains information such as the date, time, URL, and response code for each request the crawler sent to the web server. By analyzing this information, it is possible to understand the details of the crawl errors.

For example, suppose you count the response codes returned to the crawler by time of day. If 5xx response codes are concentrated at a specific date and time, you can infer that something went wrong during that period. Likewise, if an error occurs only at a specific URL, you can infer that the URL itself has an issue. If a URL is reported as an error in the Index Coverage Report but the raw log shows no evidence that the crawler requested it, the server may not have been able to process the crawler’s request in the first place (for example, a DDoS attack from overseas temporarily degraded the server response).
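Counting 5xx responses per hour is straightforward once the timestamp and response code are extracted. The following is a rough sketch, assuming the access log layout shown at the beginning of this post. The function name and the sample lines are illustrative only.

```python
import re
from collections import Counter
from datetime import datetime

# Captures the timestamp and the response code that follows the quoted request.
LINE_PATTERN = re.compile(r'\[(?P<time>[^\]]+)\] "[^"]*" (?P<status>\d{3}) ')


def server_errors_per_hour(lines, bot_token="Googlebot"):
    """Count 5xx responses per hour for lines that mention the crawler."""
    counts = Counter()
    for line in lines:
        match = LINE_PATTERN.search(line)
        if not match or bot_token not in line:
            continue
        if match.group("status").startswith("5"):
            stamp = datetime.strptime(match.group("time"), "%d/%b/%Y:%H:%M:%S %z")
            counts[stamp.strftime("%Y-%m-%d %H:00")] += 1
    return counts


# Made-up sample data in the same layout as the access log above.
LINE = ('www.example.com 192.0.2.1 - - [28/Aug/2018:{clock} +0900] '
        '"GET /column/ HTTP/1.1" {status} 0 "-" '
        '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"')
sample_lines = [
    LINE.format(clock="00:51:26", status=503),
    LINE.format(clock="00:59:26", status=500),
    LINE.format(clock="01:10:00", status=200),
    LINE.format(clock="02:05:00", status=503),
]
errors = server_errors_per_hour(sample_lines)
for hour, hits in sorted(errors.items()):
    print(hour, hits)
```

Swapping the key from the hour to the requested URL gives the per-URL view of the same errors.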

It is not always guaranteed that the cause can be found from researching raw logs, but if you want more information than what is provided in Search Console, then I suggest you take a look at your raw logs first.

Investigate how a website attack occurred and how to respond

This last section is less relevant to SEO, but it concerns a very serious problem for any website. The websites you manage can come under sustained attack, for example F5 attacks that simply reload pages over and over, or DDoS attacks that aim to bring down servers.

What would you do in the worst-case scenario, where a backdoor (a hidden way into the server) has been installed on your site and the website has been tampered with?

If an attack happens, the best way to solve it is to rely on a specialist. There are many cybersecurity experts nowadays. Now that attacks are diversifying and are becoming more complex, there’s only so much you can do yourself. If the problem is serious, we recommend that you first consult with an expert.

When you get help from a specialist, you will often be asked to provide raw server logs. This is because raw logs often contain a clear trace of how the web server was attacked and how the backdoor was installed.

The error log shown at the beginning of this post is a real error log, recorded when our website was attacked from a certain overseas IP address. At that time, a large number of requests arrived from a specific IP address and the server response dropped drastically. In such cases, blocking access from that IP address is a useful step. However, since attackers often have multiple IP addresses (or springboard servers), closing the server’s security holes is the more fundamental solution.

Raw logs are a useful source of information for closing security holes, because they show where the attack is focused. For example, if you find that an attacker is running a brute force attack on your login page to break your password, changing the login page URL from the default one can be a useful countermeasure. Even in the unlikely event that a backdoor is installed, a trace such as its file name may remain in the raw log. You can then use that information as a clue to clean the server and remove the backdoor completely.
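To see which address is behind a burst of entries like those in the error log, the warnings can be counted per client. The sketch below assumes the error log layout shown at the beginning of this post. The function name is illustrative, and the sample lines use made-up documentation IP addresses in place of the masked ones.

```python
import re
from collections import Counter

# Assumed layout: [timestamp] [module:level] [pid N] [client IP:port] message
ERROR_PATTERN = re.compile(
    r'\[(?P<time>[^\]]+)\] \[(?P<module>[^:\]]+):(?P<level>[^\]]+)\] '
    r'\[pid (?P<pid>\d+)\] \[client (?P<client>[^\]]+)\] (?P<message>.*)'
)


def warnings_per_client(lines):
    """Count warn-level error log entries per client IP address."""
    counts = Counter()
    for line in lines:
        match = ERROR_PATTERN.match(line)
        if match and match.group("level") == "warn":
            ip = match.group("client").rsplit(":", 1)[0]  # drop the trailing port
            counts[ip] += 1
    return counts


# Made-up sample data in the same layout as the error log above.
sample_lines = [
    "[Thu Mar 01 10:38:15.774578 2018] [access_limit:warn] [pid 88859] [client 192.0.2.10:0] AccessExceededNumber",
    "[Thu Mar 01 10:38:15.776677 2018] [access_limit:warn] [pid 88628] [client 192.0.2.10:0] AccessExceededNumber",
    "[Thu Mar 01 10:38:15.776731 2018] [access_limit:warn] [pid 88628] [client 192.0.2.10:0] AccessExceededNumber",
    "[Thu Mar 01 10:38:16.100000 2018] [access_limit:warn] [pid 88869] [client 198.51.100.7:0] AccessExceededNumber",
]
offenders = warnings_per_client(sample_lines)
for ip, hits in offenders.most_common():
    print(ip, hits)
```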
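One way to spot a possible brute force attempt in an access log is to count POST requests to the login URL per IP address. This is only a sketch: the login path `/login/` and the threshold of 3 are hypothetical values chosen for the example, the sample lines are made up, and an access log in this layout does not say whether a login attempt failed, so the count is a rough signal rather than proof.

```python
import re
from collections import Counter

# Captures the client IP, the request method, and the requested path.
ACCESS_PATTERN = re.compile(
    r'^\S+ (?P<ip>\S+) \S+ \S+ \[[^\]]+\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) '
)

LOGIN_PATH = "/login/"  # hypothetical; use your site's real login URL
THRESHOLD = 3           # arbitrary value for this example


def suspected_brute_force(lines, login_path=LOGIN_PATH, threshold=THRESHOLD):
    """Return IPs with at least `threshold` POST requests to the login path."""
    attempts = Counter()
    for line in lines:
        match = ACCESS_PATTERN.match(line)
        if not match or match.group("method") != "POST":
            continue
        if match.group("path").split("?", 1)[0] == login_path:
            attempts[match.group("ip")] += 1
    return {ip: hits for ip, hits in attempts.items() if hits >= threshold}


# Made-up sample data in the same layout as the access log above.
LINE = ('www.example.com {ip} - - [01/Mar/2018:10:38:15 +0900] '
        '"{method} {path} HTTP/1.1" 200 512 "-" "ExampleClient/1.0"')
sample_lines = [
    LINE.format(ip="192.0.2.10", method="POST", path="/login/"),
    LINE.format(ip="192.0.2.10", method="POST", path="/login/"),
    LINE.format(ip="192.0.2.10", method="POST", path="/login/"),
    LINE.format(ip="198.51.100.7", method="POST", path="/login/"),
    LINE.format(ip="198.51.100.7", method="GET", path="/column/"),
]
suspects = suspected_brute_force(sample_lines)
print(suspects)
```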

Last thoughts

So far, we have explained the value of web server “raw logs”, especially for SEO. Raw logs may not be something you deal with day to day, but it is a good idea to take a look at them. You might notice something that you had not noticed before.

Finally, if you are facing any SEO problems, including raw log analysis for SEO as I described in this post, Principle is happy to help!


Sr. SEO Consultant

MA from University of Tokyo. After working for the Ministry of Education, Culture, Sports, Science, and Technology for 11 years, Dai worked with an email delivery system and website at an IT venture. Dai is now focused on technical SEO and deployment for growing companies from various industries.